A blog about some of my projects in augmented-reality, mixed-reality, home automation, electronics, computer vision, deep learning, and anything else I decide to experiment with.

Sunday 29 September 2013

Blog usage stats

This isn't related to my work, just a random post with some interesting stats about blog visitors. There's nothing else to say about it really, so enjoy the pretty plots.

Location:

Milton Keynes, UK

The International Conference on Image Processing 2013

After attending my first international conference as a PhD student, I thought I'd do a quick review of the event and its content.

Day 0 - Thursday 12th September

Australia is far away: 10,500 miles (16,898 km), which meant 3 x 7 hr flights and 2 flight changes. I can't wait for sub-orbital intercontinental flights to become routine! The +Emirates +Boeing 777-300ER (Extended Range) aircraft was impressive nonetheless. It was equipped with a ~13" LCD touchscreen and a removable phone-sized touchscreen remote, flanked by a USB port for photo playback on the ICE (Information, Communication & Entertainment) system and a universal (110V/60Hz) power socket, which provided plenty to keep me busy.

| +Emirates ICE system |

| A view that never gets old |

Day 1 - Sunday 15th September

The event kicked off for me on the Sunday afternoon with a tutorial on RGBD Image Processing for 3D Modeling and Texturing. The main focus of this session was depth information from structured light cameras; it was interesting to see some of the techniques being used to improve depth information from colour cameras. Hopefully I'll be able to use some of this in my research.

Sunday evening was the welcome reception. This was a great chance to mingle, chat and network, and an open bar and good food facilitated this very well. Another attraction that provided a good talking point was a mini petting zoo... This was pretty surreal: I found myself in the middle of a conference centre stroking a koala, a kangaroo and a wombat.

Day 2 - Monday 16th September

Now the conference kicks off properly and gets into full swing pretty quickly. There were oral presentations, tech demos and poster presentations all running in parallel. If you have a broad area of research you'll be darting from room to room trying to catch talks of interest. I'd recommend having a good look through the technical program, highlighting the talks of most interest and focusing on attending those. It sounds obvious, but trying to get round everything is impossible.

This is where the poster sessions are possibly more useful, as the posters are literally hanging around for longer and it's easier to discuss the work face-to-face.

Monday was topped off with a workshop on VP9 from +Google, presented by +Debargha Mukherjee. It wasn't really in my field, but it was an interesting insight into the performance of VP9, where it's going next and the process of software development at the big G!

Day 3 - Tuesday 17th September

There's not much else I can say quickly, other than it was much the same as Monday: a busy schedule and plenty of things to attend. I'm not going to review all the work I saw; it would take far too long and not really provide anything of great use to anyone. If you are interested, the program can be found here.

Tuesday evening was polished off with a banquet. It started with some pretty stereotypical Aboriginal music and dancing, served with the first course, which was "a taste of Australia". The main course of steak followed a rather odd combination of dance and technology; I'm not really sure it was right for a geeky audience. Fillet steak is always a welcome meal in my book, but to be honest it was overcooked for my liking: it's medium-rare or gtfo. Dessert was accompanied by some more stereotypical music, this time by a folk-like band.

Day 4 - Wednesday 18th September

Finally it was almost time to present my work. Unfortunately it was toward the end of the last day, so inevitably the numbers started to dwindle a bit. That said, I did have some good discussions with quite a few people who I think were interested in the results presented (or were very good at feigning it). Below is the poster presented.

Summary

With such a broad range of topics being presented, from medical imaging, radar, image enhancement, recognition and quality assessment, it was easy to get mentally fatigued trying to take it all in. My hint for anyone yet to attend one of these conferences is to decide what's of interest and really concentrate on those talks. However, don't ditch everything else entirely: the poster sessions are invaluable for gaining insights into other areas of research that may be of interest, and at least you can read the posters and absorb them at your own rate.

Labels:

3d mapping,

australia,

computer vision,

cranfield,

icip,

icip13,

machine learning,

opencv,

phd,

research,

stereo vision

Location:

Milton Keynes, UK

Thursday 29 August 2013

Meshlab Load Multiple

Quick post about something I've just found out about +MeshLab. I was trying to find a way to load multiple point clouds from .ply files into MeshLab from the command line.

I'm interested in doing this because I want a link in PowerPoint to trigger MeshLab to load a bunch of files.

To launch external programs/files from PowerPoint...

- Select the text you want to create the link with

- 'Insert Tab'

- In the 'Links' panel click on 'Action'

- Point 'Run program' towards the .exe of the program you wish to launch

With Meshlab it'll look something like this

C:\Program Files\VCG\MeshLab\meshlab.exe pointCloud.ply

The problem was I wanted to load in 3 .ply files. Doing the following just loads in the first point cloud.

C:\Program Files\VCG\MeshLab\meshlab.exe pointCloud1.ply pointCloud2.ply

So the easiest way I can see is to use a MeshLab project file. Load all your point clouds into MeshLab, then go to File > Save Project.

This creates a .mlp file that contains the following...

<!DOCTYPE MeshLabDocument>
<MeshLabProject>
 <MeshGroup>
  <MLMesh label="PC1.ply" filename="PC1.ply">
   <MLMatrix44>
1 0 0 0 0 1 0 0 0 0 1 0 0 0 0 1
   </MLMatrix44>
  </MLMesh>
  <MLMesh label="PC2.ply" filename="PC2.ply">
   <MLMatrix44>
1 0 0 0 0 1 0 0 0 0 1 0 0 0 0 1
   </MLMatrix44>
  </MLMesh>
  <MLMesh label="PC3.ply" filename="PC3.ply">
   <MLMatrix44>
1 0 0 0 0 1 0 0 0 0 1 0 0 0 0 1
   </MLMatrix44>
  </MLMesh>
 </MeshGroup>
 <RasterGroup/>
</MeshLabProject>

Edit the file in your favourite text editor so that it lists all of your .ply files (one MLMesh entry per file). Then you can point PowerPoint to

C:\Program Files\VCG\MeshLab\meshlab.exe "C:\work\stuff\projectfile.mlp"
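If you have a lot of point clouds, editing the .mlp by hand gets tedious. Below is a minimal C++ sketch that writes a project in the same shape as the file above: every mesh gets an identity MLMatrix44, followed by an empty RasterGroup. The function names are made up for this example, and I'm assuming MeshLab will accept any file that follows the structure of the one it saved. File names are written verbatim, with no XML escaping.

```cpp
#include <cassert>
#include <fstream>
#include <sstream>
#include <string>
#include <vector>

// Build the text of a MeshLab project (.mlp) listing each .ply file with an
// identity transform, following the structure of the project MeshLab saved.
std::string makeMeshLabProject(const std::vector<std::string>& plyFiles) {
    std::ostringstream out;
    out << "<!DOCTYPE MeshLabDocument>\n"
        << "<MeshLabProject>\n"
        << " <MeshGroup>\n";
    for (const std::string& name : plyFiles) {
        // No XML escaping is done, so names must not contain double quotes.
        assert(name.find('"') == std::string::npos);
        out << "  <MLMesh label=\"" << name << "\" filename=\"" << name << "\">\n"
            << "   <MLMatrix44>\n"
            << "1 0 0 0 0 1 0 0 0 0 1 0 0 0 0 1\n"  // 4x4 identity, row by row
            << "   </MLMatrix44>\n"
            << "  </MLMesh>\n";
    }
    out << " </MeshGroup>\n"
        << " <RasterGroup/>\n"
        << "</MeshLabProject>\n";
    return out.str();
}

// Write the project to disk; returns false if it could not be opened or written.
bool writeMeshLabProject(const std::string& path,
                         const std::vector<std::string>& plyFiles) {
    std::ofstream file(path);
    if (!file) return false;
    file << makeMeshLabProject(plyFiles);
    return static_cast<bool>(file);
}
```

Something like writeMeshLabProject("C:\\work\\stuff\\projectfile.mlp", {"PC1.ply", "PC2.ply", "PC3.ply"}) would produce the kind of file the PowerPoint link above points at.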

Labels:

meshlab,

phd,

point clouds,

powerpoint,

windows

Location:

Milton Keynes, UK

Monday 19 August 2013

Playing with the OpenCV HoG Detector

So... long-time-no-post.

I've just taken my robot for a walk to collect some data, and while I wait for the 40GB to copy I thought I'd do a quick post. A few months ago I was playing with the HoG detector in +OpenCV using the PETS2009 data set.

My idea was that I might create a home security system from a bunch of old bits of hardware that are gathering dust.

- 1 x HTC Magic

- 1 x HTC Desire

- 1 x Fat Gecko Suction Mount

- 1 x Old HP Laptop

The plan is to use the two devices as IP cams using this nifty app. Using the CURL lib, I can access the images from C++ really easily and start throwing them at OpenCV.

Here's a quick clip of the HoG detector running on the PETS09 data. It's not bad for an out-of-the-box solution.

OpenCV is available for Android, so I could try running it directly on the handsets. However, neither the Magic nor the Desire is particularly powerful, so they are unlikely to process the images as fast as even a beaten-up old lappy.

Now that the images are being fed into OpenCV it's time to think about how I want to process them. Face recognition for the front door? Build a database of visitors? Get it to welcome me home?

Below is the code (mostly taken from the CURL examples) to obtain the images from a network location, and an example of the OpenCV HoG detector.

#include <cstdlib>
#include <cstring>
#include <curl/curl.h>
#include <opencv2/opencv.hpp>

using cv::Mat;

// Growable buffer that libcurl writes the downloaded JPEG into
struct memoryStruct {
    char *memory;
    size_t size;
};

static void* CURL_realloc(void *ptr, size_t size)
{
    /* There might be a realloc() out there that doesn't like reallocing
       NULL pointers, so we take care of it here */
    if (ptr)
        return realloc(ptr, size);
    else
        return malloc(size);
}

// libcurl write callback: append each received chunk to the buffer
size_t WriteMemoryCallback(void *ptr, size_t size, size_t nmemb, void *data)
{
    size_t realsize = size * nmemb;
    struct memoryStruct *mem = (struct memoryStruct *)data;
    char *grown = (char *)CURL_realloc(mem->memory, mem->size + realsize + 1);
    if (!grown)
        return 0; // out of memory: a short count makes libcurl abort the transfer
    mem->memory = grown;
    memcpy(&(mem->memory[mem->size]), ptr, realsize);
    mem->size += realsize;
    mem->memory[mem->size] = 0;
    return realsize;
}

// Keep requesting snapshots until one decodes into a valid image
Mat getNewFrameFromAndroid(CURL *curl)
{
    while (true) {
        // set up the write to memory buffer (buffer starts off empty)
        memoryStruct buffer;
        buffer.memory = NULL;
        buffer.size = 0;
        curl_easy_setopt(curl, CURLOPT_URL, "http://192.168.0.8:8080/shot.jpg");
        // tell libcurl where to write the image (to a dynamic memory buffer)
        curl_easy_setopt(curl, CURLOPT_WRITEFUNCTION, WriteMemoryCallback);
        curl_easy_setopt(curl, CURLOPT_WRITEDATA, (void *)&buffer);
        // get the image from the specified URL
        CURLcode res = curl_easy_perform(curl);
        // decode memory buffer using OpenCV
        cv::Mat imgTmp;
        if (res == CURLE_OK && buffer.size > 0)
            imgTmp = cv::imdecode(cv::Mat(1, (int)buffer.size, CV_8UC1, buffer.memory), cv::IMREAD_UNCHANGED);
        // imdecode allocates its own pixel data, so the raw buffer can be freed
        free(buffer.memory);
        if (!imgTmp.empty())
            return imgTmp;
    }
}

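// People detector using OpenCV's default pre-trained HOG + linear SVM model
cv::HOGDescriptor hog;
hog.setSVMDetector(cv::HOGDescriptor::getDefaultPeopleDetector());
std::vector<cv::Rect> found;
// img: a frame, e.g. from getNewFrameFromAndroid()
// hitThreshold 0, winStride 8x8, padding 32x32, scale step 1.05, grouping threshold 2
hog.detectMultiScale(img, found, 0, cv::Size(8,8), cv::Size(32,32), 1.05, 2);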
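detectMultiScale often returns a smaller box nested inside a bigger one around the same person. The people-detection sample that ships with OpenCV cleans this up by discarding any rectangle that lies entirely inside another. Here is a dependency-free sketch of that filter; Box is a hypothetical stand-in for cv::Rect, and unlike the sample, exact duplicates keep one copy rather than cancelling each other out.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Rect so the sketch builds without OpenCV.
struct Box {
    int x, y, width, height;
};

// True if 'inner' lies completely inside 'outer' (shared edges count as inside).
bool isInside(const Box& inner, const Box& outer) {
    return inner.x >= outer.x && inner.y >= outer.y &&
           inner.x + inner.width <= outer.x + outer.width &&
           inner.y + inner.height <= outer.y + outer.height;
}

// Keep only detections that are not nested inside another detection.
// For identical boxes, the first one is kept and later copies are dropped.
std::vector<Box> dropNestedDetections(const std::vector<Box>& found) {
    std::vector<Box> kept;
    for (std::size_t i = 0; i < found.size(); ++i) {
        assert(found[i].width >= 0 && found[i].height >= 0);
        bool nested = false;
        for (std::size_t j = 0; j < found.size() && !nested; ++j) {
            if (j == i || !isInside(found[i], found[j])) continue;
            // found[i] is inside found[j]; it is only spared when the two
            // boxes are identical and found[i] comes first
            const bool identical = isInside(found[j], found[i]);
            nested = !identical || j < i;
        }
        if (!nested) kept.push_back(found[i]);
    }
    return kept;
}
```

With OpenCV you would copy each cv::Rect in found into a Box (or swap Box for cv::Rect directly) before filtering, then draw the survivors.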

Monday 15 July 2013

BMVA 2013 Summer School Review

So after a much-needed weekend of recuperation from the BMVA 2013 Summer School held at the University of Manchester, it's time to get back to work, but before I do, here's a (very) quick summary/review of the summer school.


| Me: Back row, second from right. Image taken from the facebook group. |

What is it?

It is a Summer School aimed at PhD students in their 1st year of study in Computer Vision and Machine Learning. It was attended by 40+ students, mostly from across the UK and some from further afield. The week was crammed with lectures and a couple of lab sessions. The schedule follows...

Day 1:

13:30 - Image Formation

15:30 - Poster Session - Amazingly, I won the best poster prize! I received an Amazon voucher and a Computer Vision book (Computer and Machine Vision: Theory, Algorithms, Practicalities by E. R. Davies - Link or on Kindle)

Poster at the bottom...

Day 2:

09:00 - Biological Vision

11:00 - Low Level Vision

13:30 - Presentation from +Tomos Williams at Image Metrics - Very impressive results (Video)

15:30 - Graphical Models for Chains, Trees and Grids

17:00 - Quantitative Probability - quite a dry topic for 5pm on Tuesday with the sun belting in...

Day 3:

09:00 - 3D Object Reconstruction - Matlab-based lab session.

13:30 - Motion & Tracking

15:30 - Performance Evaluation

17:00 - Vision Algorithms

Day 4:

09:00 - Shape & Appearance Models

11:00 - Machine Learning for Computer Vision - Presented by my PhD supervisor; the other students I spoke to consistently agreed that this was the best lecture of the week.

13:30 - Local Feature Descriptors

15:30 - Structure from Motion - Covered the basics of camera calibration and SfM well, with some very impressive state-of-the-art results. Very useful for my work.

17:00 - Real-time Vision - Some useful info about optimisation and things to consider when trying to make a system real-time.

Day 5:

09:00 - Matlab Lab

11:00 - From Lab to Real-World - Some interesting info about non-linear solvers, presented by +Andrew Fitzgibbon from Microsoft Research.

Summary

It was a cracking week, lots of learning and most of all meeting some great people all at about the same stage of their research. Some lectures were a bit hit and miss - it's probably quite hard to pitch a good lecture to so many different knowledge levels and areas of interest.

I personally (and I know others did too) got more from the week by meeting other students and finding out about their work over a G&T in the bar.

BMVA 2013 Poster


| The Poster... |

Wednesday 26 June 2013

Logging Software Done! (Almost)

Ok, so it has been a while since my last post. I've been working on getting some robust logging software working on the logging PC. The software is designed to capture from multiple +Point Grey Research cameras at 1280x960 15fps, a +PrimeSense P1080 depth camera at 15fps, a Phidget IMU and a GPS receiver.

The program is a multithreaded logger that uses 4 CPU threads (done with OpenMP):

Thread 0 - Point Grey image acquisition and writing data to disk

Thread 1 - Primesense P1080 Depth and Colour image acquisition

Thread 2 - Read new Phidget data

Thread 3 - Read new GPS data

The most important part of the data is the multi-viewpoint camera data from the Point Grey cameras, as synchronisation between these images is key to accurate, reliable 3D reconstruction. As soon as the cv::Mat objects from every camera have returned, Thread 0 writes the image data from each camera, writes the image data from the PrimeSense camera, and logs the Phidget and GPS info to CSV files.
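To make the CSV logging concrete, here is a sketch of how one row of a GPS log might be built so that each saved frame can later be matched to a position. The column layout (frame index, timestamp, latitude, longitude) and the function name are assumptions for illustration; the post doesn't say what the logger's CSV files actually contain.

```cpp
#include <cassert>
#include <iomanip>
#include <sstream>
#include <string>

// One line of a hypothetical GPS log: frame index, timestamp (s) and position
// (decimal degrees), with six decimal places for the floating-point columns.
std::string gpsCsvRow(int frameIndex, double timestampSec, double latDeg, double lonDeg) {
    assert(frameIndex >= 0);
    std::ostringstream row;
    row << frameIndex << ','
        << std::fixed << std::setprecision(6)
        << timestampSec << ',' << latDeg << ',' << lonDeg;
    return row.str();
}
```

Writing the frame index into each row, rather than relying on line order, keeps the log usable even if a frame is dropped.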

A neat feature of the logger is the dynamic delay function that aims to keep a maximum frame rate.

A timer is started at the beginning of image acquisition and stopped at the end of all the file writing. If this processing time is less than the desired frame period of 66.67ms (15fps), a dynamic delay is calculated simply as SleepTime = MAX(DesiredDelay-ProcessingTime,2); cv::waitKey(SleepTime); This dynamic delay means the program doesn't query the cameras faster than they're configured for, and gives us a nice steady frame rate. The MAX(n,2) protects against negative time values and means it always pauses for at least 2ms, allowing the display to draw the frames to the screen. Obviously, if ProcessingTime >= DesiredDelay then it is not possible to achieve the selected frame rate and the program just runs as fast as it can.
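The dynamic delay described above can be sketched in standard C++ as follows. dynamicDelayMs and timeCycleAndGetDelay are hypothetical names, and std::chrono::steady_clock stands in for whatever timer the logger uses. The result is truncated to whole milliseconds because cv::waitKey takes an int.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>

// Milliseconds to wait after a capture/write cycle so the loop does not run
// faster than the desired frame period; never less than minWaitMs.
int dynamicDelayMs(double desiredPeriodMs, double processingMs, int minWaitMs = 2) {
    assert(desiredPeriodMs > 0.0 && minWaitMs >= 0);
    const int remaining = static_cast<int>(desiredPeriodMs - processingMs);
    return std::max(remaining, minWaitMs);  // MAX(DesiredDelay-ProcessingTime, 2)
}

// Time one acquisition/write cycle and return the delay to apply afterwards.
template <typename Work>
int timeCycleAndGetDelay(Work&& work, double fps) {
    const auto start = std::chrono::steady_clock::now();
    work();  // grab frames, write images and log files
    const std::chrono::duration<double, std::milli> elapsed =
        std::chrono::steady_clock::now() - start;
    return dynamicDelayMs(1000.0 / fps, elapsed.count());
}
```

In the capture loop this would become something like cv::waitKey(timeCycleAndGetDelay(grabAndWrite, 15.0)), where grabAndWrite is a placeholder for whatever does the acquisition and file writing.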

There is still one unresolved problem: the USB GPS receiver appears to flood the USB bus. Without the GPS connected, two USB Point Grey FireFly cameras happily work at 1280x960 15fps. When the GPS is added to the system it causes errors on the bus, causing frames to be dropped. The really odd thing is that these errors only appear to happen when the cameras auto-adjust exposure. Fixing the shutter and gain values programmatically solves the issue; however, this isn't a viable solution, as the cameras are going to be mounted on a vehicle that will be driven through scenes with wildly varying lighting conditions.
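A quick back-of-the-envelope check shows how little headroom the bus has. Assuming the FireFly cameras send uncompressed 8-bit pixels (mono or raw Bayer) and share one USB 2.0 host controller, two 1280x960 streams at 15fps need about 36.9 MB/s, which is over 60% of USB 2.0's theoretical 480 Mbit/s (60 MB/s) before any protocol overhead. This doesn't pin down why the GPS causes trouble, but it shows the cameras alone already use a large share of the bus. The helper names below are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Raw bytes per second for one uncompressed video stream.
std::int64_t streamBytesPerSecond(int width, int height, int fps, int bytesPerPixel) {
    assert(width > 0 && height > 0 && fps > 0 && bytesPerPixel > 0);
    return static_cast<std::int64_t>(width) * height * fps * bytesPerPixel;
}

// Fraction of USB 2.0's 480 Mbit/s signalling rate used by a given data rate.
// Real-world USB 2.0 throughput is noticeably lower than this theoretical figure.
double fractionOfUsb2(std::int64_t bytesPerSecond) {
    const double usb2BytesPerSecond = 480e6 / 8.0;  // 60,000,000 bytes/s
    return static_cast<double>(bytesPerSecond) / usb2BytesPerSecond;
}
```

For two cameras, fractionOfUsb2(2 * streamBytesPerSecond(1280, 960, 15, 1)) comes out at roughly 0.61.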

This image shows the output of three different cameras with identical fixed shutter and gain values. The top left is the high spec Flea2 and the top right/lower left are the lower cost FireFly cameras. It's interesting to note that the Flea2 has wider spectral sensitivity as it can see the structured light pattern from the PrimeSense camera.

In this one we can see a boat load of errors coming from cameras 1-2, the FireFly cameras.

Here the camera shutter and gain values have been fixed independently of one another. While this looks good, it is not a solution because of the varying lighting conditions it will operate in.

It is almost complete. I'm just waiting to get an RS-232 GPS device to remove the USB bus from the equation, and hopefully that'll just work...


| Spot the devices... |


| Sort of good... |


| ...not so good... |


| ...sort of good. |

Labels:

3d mapping,

computer vision,

cranfield,

data logging,

kinect,

mapping,

opencv,

openmp,

pcl,

phd,

point grey,

primesense,

robotics,

stereo vision

Location:

Cranfield, Central Bedfordshire MK43, UK

Friday 31 May 2013

Getting started with OpenMP

In the previous posts about creating logging software for the boat trial, I mentioned that we were logging GPS and stereo images. This was done using OpenMP to parallelise the processing, so that the program wasn't hanging around waiting for a GPS update and causing delays between image samples.

This is a quick guide on how to get OpenMP going.

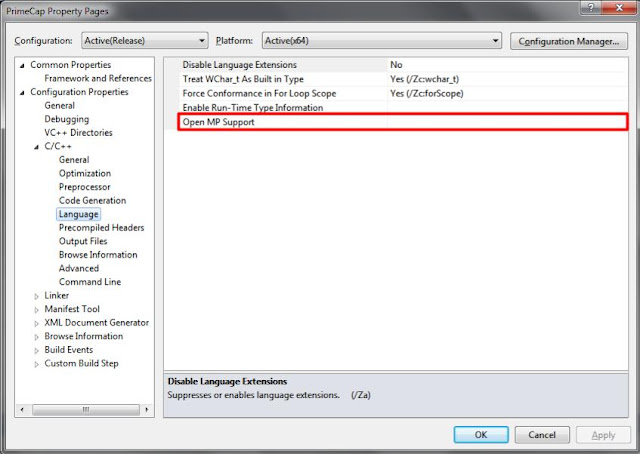

My first mistake was thinking it was something that needed to be installed and configured. It's just a switch in Visual Studio 2010: go to the project properties page, then C/C++ > Language > OpenMP Support > Yes (this adds the /openmp compiler flag).

Now you'll be amazed how easy it is to get a simple program working that uses two cores... Here's a snippet from the logger that captures GPS data using one core and images from a camera using the other. I've highlighted the important lines of code and briefly outlined their functions.

#include <iostream>
#include <stdio.h>
#include <string>
#include <Windows.h>
#include "SerialGPS.h"
#include <omp.h>
#include <opencv/cv.h>
#include <opencv/highgui.h>

using namespace std;

int main( int argc, char** argv )
{
    //set up the com port
    SerialGPS GPS("COM6",4800);
    SerialGPS::GPGGA GPSData={};
    if (!GPS.isReady()) { return 0; }
    cout << "GPS Connected Status = " << GPS.isReady() << endl;
    int gpsReadCounter=0;

    //create the video capture object
    cv::VideoCapture cap1;
    cap1.open(0);
    cv::Mat Frame;

    //define the shared variables between threads
    //(the opening brace must go on the line after the pragma, not on the pragma line)
    #pragma omp parallel sections shared(GPSData)
    {
        //////// FIRST CPU THREAD ////////
        #pragma omp section
        {
            for (int i = 0; i<100; i++) {
                cap1 >> Frame;
                cv::imshow("Cam",Frame);
                cv::waitKey(33);
                //reads whatever the GPS thread last wrote (no locking here)
                cout << " GPS Data Time = " << GPSData.GPStime << endl;
            }
        }
        //////// SECOND CPU THREAD ////////
        #pragma omp section
        {
            for (int i = 0; i<100; i++) {
                if (GPS.update(GPSData, FORCE_UPDATE)) {
                    cout << " T=" << GPSData.GPStime << " La=" << GPSData.Lat << " Lo=" << GPSData.Lon << endl;
                } else {
                    cout << "Error Count = " << GPS.getErrorCount() << " ";
                    GPS.displayRAW();
                    cout << endl;
                }
            }
        }
    }
    return 0;
}

- Make sure to #include <omp.h>

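- The line #pragma omp parallel sections shared(GPSData) makes it explicit that the data structure GPSData is shared, so it is accessible from either thread (variables declared before a parallel region are shared by default in OpenMP unless a default clause says otherwise).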

- The lines #pragma omp section define the code that is to run on an individual thread

- GPSData gets populated from the call GPS.update(GPSData, FORCE_UPDATE)

- Since we initialised all the values to 0 with SerialGPS::GPGGA GPSData={}; it doesn't matter if the camera thread gets to reading the data before the GPS update thread has populated the structure with any meaningful values.

- As soon as the GPS thread receives a valid signal and updates GPSData accordingly, this data is accessible from the camera thread.

- Note that while both threads loop while i<100, this i value is local to each thread. It's very likely that one thread will finish before the other, as no synchronisation is taking place here; we're just offloading the GPS updates so that they don't interfere with the image sampling, which allows us to simply tag an image with the latest good GPS values.

This example broadly gives you an idea of how to use two threads and share a variable between them.

There's a handy OpenMP cheatsheet here (http://openmp.org/mp-documents/OpenMP3.1-CCard.pdf)
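One caveat with the snippet above: the camera thread reads GPSData while the GPS thread may be writing it, and without any locking that is a data race in C++, so a frame could in principle be tagged with a half-updated struct (say, a new time with an old latitude). A minimal way round this is to hand the latest fix across through a small mutex-guarded holder. This sketch swaps in std::mutex from the C++11 standard library (so Visual Studio 2012 or later) rather than an OpenMP construct, although #pragma omp critical would do a similar job. GpsFix is a hypothetical stand-in for SerialGPS::GPGGA, whose fields aren't shown in the post.

```cpp
#include <cassert>
#include <mutex>

// Hypothetical stand-in for SerialGPS::GPGGA (its real fields aren't shown).
struct GpsFix {
    double time;
    double lat;
    double lon;
};

// Holds the most recent fix. The GPS thread calls store(), the camera thread
// calls load(); the mutex stops a reader from seeing a half-written struct.
class LatestFix {
public:
    void store(const GpsFix& fix) {
        std::lock_guard<std::mutex> lock(mutex_);
        fix_ = fix;
    }
    GpsFix load() const {
        std::lock_guard<std::mutex> lock(mutex_);
        return fix_;  // the copy is taken while the lock is held
    }
private:
    mutable std::mutex mutex_;
    GpsFix fix_{};  // zero-initialised, like GPSData={} in the post
};
```

The GPS section would call store() after each successful GPS.update, and the camera section would call load() once per frame, so each frame is tagged with a consistent fix.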

Wednesday 29 May 2013

A "quick" Side Project

Since the last post I've had my 9 month PhD review; it went pretty smoothly and I passed with no problems :)

Shortly after, I was asked by my project supervisor if I could quickly help out on another project. I'm always keen to work on a variety of projects.

The task was simply to pull together some existing code to create a data logging program that logs stereo images from the +Point Grey Research Bumblebee camera and logs GPS positions, all on a remotely operated boat for the +Environment Agency.

I wrote a little C++ class to connect to a COM port and read off GPS data. This worked fine. The Bumblebee, however, proved to be more of a challenge. I consulted a Masters student who had used it more than I had and obtained some code from him to read the images. The logging laptops are +Dell 6400 ATGs, which are rugged laptops, perfect for use on the boat. Fresh installs of Win7 x64 had been put on these machines, as they'd been hacked to pieces (not in the literal sense, more in the coding sense) in the past for various projects. So, with the code working on a 32-bit WinXP machine, we ported the source over to +Microsoft Visual Studio (Link) on Win7, installed all the relevant +Point Grey Research drivers and..... nothin'.... 2 days went by trying every sodding combination of drivers and libraries, but none of the laptops were having any of it under x64. So we binned it and went back to trusty old XP.

We had hoped to get out onto the river yesterday (28/05/13), but we were held up by a morning re-coding session to log grayscale rectified stereo images instead of colour un-rectified images, delays from +Leica Geosystems AG in obtaining a theodolite, problems with a Bluetooth 5Hz GPS system that needs a power cycle if something tries to connect at the incorrect baud rate and, not forgetting, the British weather pissing it down all afternoon.

As it was getting on for 6pm by the time everything was up and running, we postponed the data logging day until tomorrow (30/05/13). Stay tuned for more....


| Student with his boat... finally. |

Tuesday 7 May 2013

Getting back to science...

So now the 9 month review report has been handed in, I can get back to doing science. Before tackling the review report, this is the stage I had reached: a 3D map of the DIP (Digital Image Processing) Lab at Cranfield University (albeit a noisy one). It is a simple reproduction created using a stereo vision algorithm and a visual odometry algorithm.

The process is...

- Create a disparity (depth) map from the stereo camera

- Calculate the camera trajectory using visual odometry

- Add point clouds to a global map with an appropriate offset calculated from the trajectory.
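The third step is essentially a rigid-body transform per frame. Below is a minimal, self-contained sketch, assuming the visual odometry supplies each new frame's motion relative to the previous one as a rotation and translation. `Pose`, `compose`, `yawPose` and `addToMap` are hypothetical helpers, not taken from the original code; in PCL the same job can be done with `pcl::transformPointCloud` into a temporary cloud followed by `+=` onto the map cloud.

```cpp
#include <array>
#include <cmath>
#include <vector>

struct Point3 { double x, y, z; };

// Hypothetical rigid-body pose: p_map = R * p_cam + t, with R stored row-major.
struct Pose {
    std::array<double, 9> R;
    std::array<double, 3> t;
};

Point3 transform(const Pose& T, const Point3& p) {
    return {T.R[0] * p.x + T.R[1] * p.y + T.R[2] * p.z + T.t[0],
            T.R[3] * p.x + T.R[4] * p.y + T.R[5] * p.z + T.t[1],
            T.R[6] * p.x + T.R[7] * p.y + T.R[8] * p.z + T.t[2]};
}

// Chain two poses: the result applies `b` first, then `a` (a * b).
// If `step` maps points from the new camera frame into the previous one,
// the running global pose is updated as global = compose(global, step).
Pose compose(const Pose& a, const Pose& b) {
    Pose out{};
    for (int r = 0; r < 3; ++r) {
        for (int c = 0; c < 3; ++c) {
            double s = 0.0;
            for (int k = 0; k < 3; ++k) s += a.R[r * 3 + k] * b.R[k * 3 + c];
            out.R[r * 3 + c] = s;
        }
        out.t[r] = a.R[r * 3 + 0] * b.t[0] + a.R[r * 3 + 1] * b.t[1] +
                   a.R[r * 3 + 2] * b.t[2] + a.t[r];
    }
    return out;
}

// Rotation of `yaw` radians about the vertical (y) axis plus a translation.
Pose yawPose(double yaw, double tx, double ty, double tz) {
    const double c = std::cos(yaw), s = std::sin(yaw);
    return Pose{{c, 0.0, s, 0.0, 1.0, 0.0, -s, 0.0, c}, {tx, ty, tz}};
}

// Append one frame's local cloud to the global map at that frame's pose.
void addToMap(std::vector<Point3>& map, const std::vector<Point3>& localCloud,
              const Pose& pose) {
    map.reserve(map.size() + localCloud.size());
    for (const Point3& p : localCloud) map.push_back(transform(pose, p));
}
```

Keeping a running `global = compose(global, step)` is what turns the trajectory into the per-frame offset; note that any drift in the odometry accumulates straight into the map with this approach.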

It's good... but there's lots of work to do yet...

The equation for converting disparity map values into real-world depth is quoted in a ton of papers/books, but I couldn't actually see where it was derived from, so I worked it out for the review report for completeness. I'm posting it up here in case it helps someone else.

Above is a diagram of the triangulation theory. We need to know a few parameters about the setup before we can triangulate from a disparity map image to real world depth.

We need:

- The focal length of the cameras (f) (ideally they would be the same)

- The baseline of the stereo rig (B)

So use similar triangles to get...

$\frac{P_{L}}{f}=\frac{X_{L}}{Z}\qquad\frac{P_{R}}{f}=\frac{X_{R}}{Z}$

...rearrange to get...

$X_{R}=\frac{ZP_{R}}{f}\qquad X_{L}=\frac{ZP_{L}}{f}$

...add them together to get the baseline...

$B=\frac{ZP_{R}}{f}+\frac{ZP_{L}}{f}=\frac{Z\left(P_{R}+P_{L}\right)}{f}$

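...taking $P_{R}$ as a signed (negative) image coordinate, the sum of the two image distances becomes the disparity $d=P_{L}-P_{R}$, so $B=\frac{Zd}{f}$, which rearranges to...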

$Z=\frac{fB}{d}$
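Putting the result to work: a minimal sketch of converting a disparity map to metric depth with $Z=\frac{fB}{d}$, assuming f is in pixels, B in metres, and the disparity map is a plain row-major array. The function names are hypothetical. The divide-by-16 helper only applies if the disparities come from OpenCV's `StereoBM`/`StereoSGBM`, which store disparity as 16-bit fixed point with 4 fractional bits.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <limits>
#include <vector>

// Z = f * B / d. With f and d in pixels and B in metres, Z comes out in metres.
// Zero or negative disparities have no valid depth, so NaN is returned for them.
double disparityToDepth(double disparityPx, double focalPx, double baselineM) {
    assert(focalPx > 0.0 && baselineM > 0.0);
    if (disparityPx <= 0.0) return std::numeric_limits<double>::quiet_NaN();
    return focalPx * baselineM / disparityPx;
}

// OpenCV's block matchers store disparity * 16 in a 16-bit integer.
double fixedPointToDisparity(std::int16_t raw) { return raw / 16.0; }

// Convert a whole row-major disparity map to depth.
std::vector<double> depthMap(const std::vector<double>& disparityPx,
                             double focalPx, double baselineM) {
    std::vector<double> depth;
    depth.reserve(disparityPx.size());
    for (double d : disparityPx) depth.push_back(disparityToDepth(d, focalPx, baselineM));
    return depth;
}
```

With made-up figures of f = 500 px and B = 0.12 m, a disparity of 20 px gives Z = 3 m. Differentiating also shows why these maps get noisy with distance: $\left|\frac{\partial Z}{\partial d}\right|=\frac{fB}{d^{2}}=\frac{Z^{2}}{fB}$, so for that rig one pixel of disparity error at 3 m is worth about 0.15 m of depth.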

Edit

10:35 22/5/13

I have no idea why, but the LaTeX equations have stopped rendering...

Adding the JavaScript for the LaTeX equations used to kill the Google+ comments box; that was the price of working equations. Now it's just killing everything... arse.

10:38 22/5/13

Oh no, wait, the comments box is back... wtf.

14:09 1/7/13

It looks like using the dynamic page template in Blogger kills the LaTeX script.

Labels: 3d mapping, computer vision, data logging, opencv, PC, pcl, phd, point grey, primesense, research, robotics, stereo vision, triangulation

Monday 29 April 2013

New toys...

Just a quick post. Received a shiny new +PrimeSense PS1080 Structured Light 3D camera today.

This little fella is going to be mounted on the robotic logging platform to replace the Kinect that's currently installed. The advantages of using this instead of the Kinect are:

1 - The mount is sturdier

2 - It's smaller

3 - It can be powered directly over USB

4 - Hopefully it'll also use less bandwidth (see the previous post for Kinect bandwidth issues: http://what-the-pixel.blogspot.co.uk/2013/04/logging-pc-v2.html)

I was hoping to just plug-and-play with my previous logging software but alas it didn't work. I think +OpenCV needs to be recompiled for it to work.

I'll probably post a walkthrough on here when I come to getting this thing working.

Friday 26 April 2013

Logging PC v2

My previous post was a quick update about building a custom PC for logging purposes. This was going well until I actually came to log some data. Using two +Point Grey Research cameras and the Microsoft Kinect (+Kinect for Developers), I quickly ran into the same problem that had prompted me to build the logging PC in the first place.

Let me rewind.... In the post (http://what-the-pixel.blogspot.co.uk/2013/03/data-collection-with-wall-e-v00001.html) I outlined the set-up of the logging robot to capture stereo images and Kinect ground truth. Using my main development laptop (+Samsung) I was able to log stereo images, point cloud data and Kinect RGB images with no problems. I then transferred the logging code over to the ruggedised +Dell laptop. Hmmm, this is where I ran into problems... it didn't work. The +Point Grey Research cameras were only returning partial images. I concluded that the +Dell laptop had all of its USB ports on the same bus and it couldn't cope with the data rates, bugger!

That brings me to the dedicated logging PC. It's small and low power, with capacity for extra cards via a PCIe slot and 2x mini-PCIe ports, not to mention a plethora of USB: 4x USB 3.0 ports on the rear I/O plate and 5x USB 2.0 headers on the motherboard. Coupled with 6GB of RAM, a Core i5 (i5-3470T @ 35W, I think) and a decent, yet smallish, 320GB SpinPoint, this was looking like a tasty little logger!

So back to the original problem... it still didn't work! After some research I found this nugget of information:

- If the Kinect is plugged into a USB 3.0 port, plug it into a USB 2.0 port instead.

- Make sure the Kinect is plugged directly into a USB 2.0 port, and not through an external USB hub.

- Since a Kinect requires at least 50% of the USB bandwidth available, make sure that the Kinect does not share the USB controller with any other devices.
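A quick back-of-envelope check makes that 50% figure less surprising. This sketch assumes the Kinect streams 640×480 at 30 fps, with depth packed at 11 bits per pixel and colour sent as raw 8-bit Bayer data; those stream formats are assumptions for illustration, and real transfers add protocol overhead on top. The constant and function names are hypothetical.

```cpp
#include <cstdint>

// Uncompressed payload rate of one video stream, in bits per second.
constexpr std::uint64_t streamBitsPerSecond(std::uint64_t width, std::uint64_t height,
                                            std::uint64_t bitsPerPixel, std::uint64_t fps) {
    return width * height * bitsPerPixel * fps;
}

// ASSUMED Kinect stream formats (illustrative, not measured):
// depth 640x480 @ 30 fps packed at 11 bits/pixel, colour as 8-bit Bayer.
constexpr std::uint64_t kKinectDepth = streamBitsPerSecond(640, 480, 11, 30);
constexpr std::uint64_t kKinectColour = streamBitsPerSecond(640, 480, 8, 30);

// USB 2.0 high-speed signalling rate in bits/s (usable throughput is lower).
constexpr std::uint64_t kUsb2SignallingRate = 480000000;

// Fraction of the raw USB 2.0 signalling rate a set of streams would occupy.
constexpr double usb2Share(std::uint64_t totalBitsPerSecond) {
    return static_cast<double>(totalBitsPerSecond) /
           static_cast<double>(kUsb2SignallingRate);
}
```

Under those assumptions the image payload alone comes to about 175 Mbit/s, over a third of USB 2.0's 480 Mbit/s signalling rate before any overhead, and real-world USB 2.0 throughput sits well below that headline rate. It's easy to see how sharing a controller with anything else could tip it over.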

So after finding that, I left the +Point Grey Research cameras plugged into two USB 3.0 ports on the I/O plate and switched the Kinect to one of the USB 2.0 motherboard headers. Blast! It still wasn't working. I'll try the other header.... nope, still rogered! There's 1 left, not holding out much hope but let's give it a shot.... finally, it's working! I'm not entirely sure why this header would be on a different controller, but it's working at the moment, which is progress.

If I have time next week I might throw Linux onto it and see if it's an OS limitation that I'm banging my head against.

Here are some pics of the USB header mods I made to the case in order to add more ports.

Photo: The mounts on the USB port perfectly aligned with the case holes. Just a shame I scratched the case with the +Dremel Europe as I was cutting... I'm sure it won't be the only war wound it gets.

Photo: This one is now labeled as the Kinect port!

Photo: Thought I'd add RS-232 while I was at it, seeing as the robot can be controlled via it and the motherboard had a header for it.

Photo: The only Kinect friendly header.....WHY!?...

Photo: Back together (again) but with upgrades :)

Tuesday 23 April 2013

New logging PC

Quick update: just built a shiny new logging PC for the robot platform. It's based on an +Intel DQ77KB board with an i5 CPU.

Tuesday 16 April 2013

Car Camera Rig Construction

I'm coming up to the 9-month PhD review, so a lot of my time is being spent writing a report for that. I did spend the weekend writing a C++ class for serial GPS reading, though. It's a bit of 're-inventing the wheel', as there are libs available, but it was actually easier just to write my own: I know it works with my GPS receiver and I'm getting exactly the data I want in the form I want. Nothing works as well as your own code. Once it's a bit more mature and extracting more information, I'll pop it online somewhere in case someone else finds it useful.
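One detail any serial GPS reader has to handle is that reads from the port arrive in arbitrary-sized chunks, so a sentence can be split across two reads. The sketch below is a hypothetical `NmeaFramer` helper (not the class mentioned above) that buffers bytes and returns complete sentences from the `$` up to the CR/LF terminator. It assumes plain NMEA 0183 text output and leaves the actual port reading out.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical helper: turns arbitrary serial-port chunks into whole NMEA sentences.
class NmeaFramer {
public:
    // Feed raw bytes; returns any sentences completed by this chunk.
    std::vector<std::string> feed(const std::string& chunk) {
        std::vector<std::string> sentences;
        for (char c : chunk) {
            if (c == '$') {
                buffer_ = "$";  // start of a new sentence; drop any partial junk
            } else if (c == '\r' || c == '\n') {
                if (buffer_.size() > 1) sentences.push_back(buffer_);
                buffer_.clear();
            } else if (!buffer_.empty()) {
                buffer_ += c;  // only accumulate once a '$' has been seen
                if (buffer_.size() > kMaxLength) buffer_.clear();  // runaway-line guard
            }
        }
        return sentences;
    }

private:
    // NMEA 0183 limits a sentence to 82 characters including '$' and CR/LF,
    // so this is a generous upper bound for the buffered text.
    static constexpr std::size_t kMaxLength = 82;
    std::string buffer_;
};
```

Each returned sentence can then be checksummed and split on commas.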

In the meantime, I spent today redesigning the extruded aluminium frame that will hold the camera array and attach to a roof rack. The items in question were purchased from http://www.valuframe.co.uk/. Just as an aside, one of the bars was a bit misshapen when it arrived, but we did receive an extra 2m of bar and a couple of extra connectors... swings & roundabouts.

I initially constructed the frame symmetrically (see crude sketch): two bars joined at the end with a 90° bracket and a corner plate (blue) to add rigidity, repeated for all corners. This worked nicely; it was strong and didn't use too many parts..... however, it blocks off two of the channels in the aluminium bar. Accessing them to add more components would mean taking apart one of the corners, as the keyed inserts have to be slid in from the end.

A redesign was needed...... (this is quite difficult to draw in 2D) but basically no channel can have both of its ends blocked. So the bars and mounts have to alternate which side they connect to.

Voila! Not very exciting... but I think it's a neat solution. Every channel is accessible from at least one end, so components can be added to any free portion of the bars.


Tuesday 26 March 2013

Using KinCap

This entry is mainly for myself so I can remember what to do to install the Kinect drivers and Point Grey Research drivers.

- FlyCapture2_x86.exe

- openni-win32-1.5.4.0-dev.exe

- nite-win32-1.5.2.21-dev.exe

- SensorKinect092-Bin-Win32-v5.1.2.1.exe

- sensor-win32-5.1.2.1-redist.exe

When installing the FlyCapture driver, DON'T just click Next, Next, Next... otherwise you'll install the USB3 drivers and they'll prevent any other device working on that bus :/

Also make sure you untick the option that asks to 'let flyCapture manage CPU idle states'..... don't let it! It's rubbish: it wouldn't throttle down my CPU :(

Also, to use the KinCap tool you'll need OpenCV 2.4.3 compiled with Kinect (OpenNI) support and PCL 1.6.0 installed.


Thursday 21 March 2013

Data Collection with WALL-E v0.0001

About 3 weeks ago (1/3/13) I configured the robot we have in the lab with a bunch of +Manfrotto Imagine More tripod parts so that it could carry 2 +Point Grey Research FireFly cameras and a +Microsoft Kinect. The Kinect will gather ground-truth 3D mapping data, while the FireFly cameras gather stereo images that will be processed offline in an attempt to generate a 3D model of the scene in front of the robot. I wrote some custom logging software in C++ using OpenCV, +PointCloudLibrary and the various SDKs needed to talk to the Kinect and FireFly cameras.

Photo: Front View Layout 1: Using the Manfrotto gripping arm to hold the Kinect as the base mount of the Kinect is susceptible to vibration.

Photo: Rear View Layout 1: Single arm holding Kinect steady.

Photo: Front View Layout 2: Two extension arms mounted on either end of the bar providing two anchor points for the Kinect.

Photo: Top-Front View Layout 2: View from above with logging laptop mounted on the rear of the robot with anti-vibration padding.

Labels: 3d mapping, computer vision, kinect, opencv, pcl, point grey, robotics

Location:

Cranfield, Central Bedfordshire MK43, UK

Wednesday 20 March 2013
